What Is Website Indexing?

Website indexing is the process by which a search engine adds a site's pages to its database. Then, based on the information received, the engine evaluates the site quality and ranks it accordingly.

Indexing websites on Google is the first step towards their optimization for search engines. You cannot rank high if your site isn’t even indexed. Thus, there is a need to constantly track your website indexing for both new pages and existing content.

If you notice that a lot of pages were not indexed, find the potential reason and fix it. For instance, if your site violates Google’s guidelines, it may be removed from the index, and you’ll have to submit a re-inclusion request. This, naturally, will affect traffic and revenue, so keeping an eye on your site indexing is always important.

How to Check Website Indexing

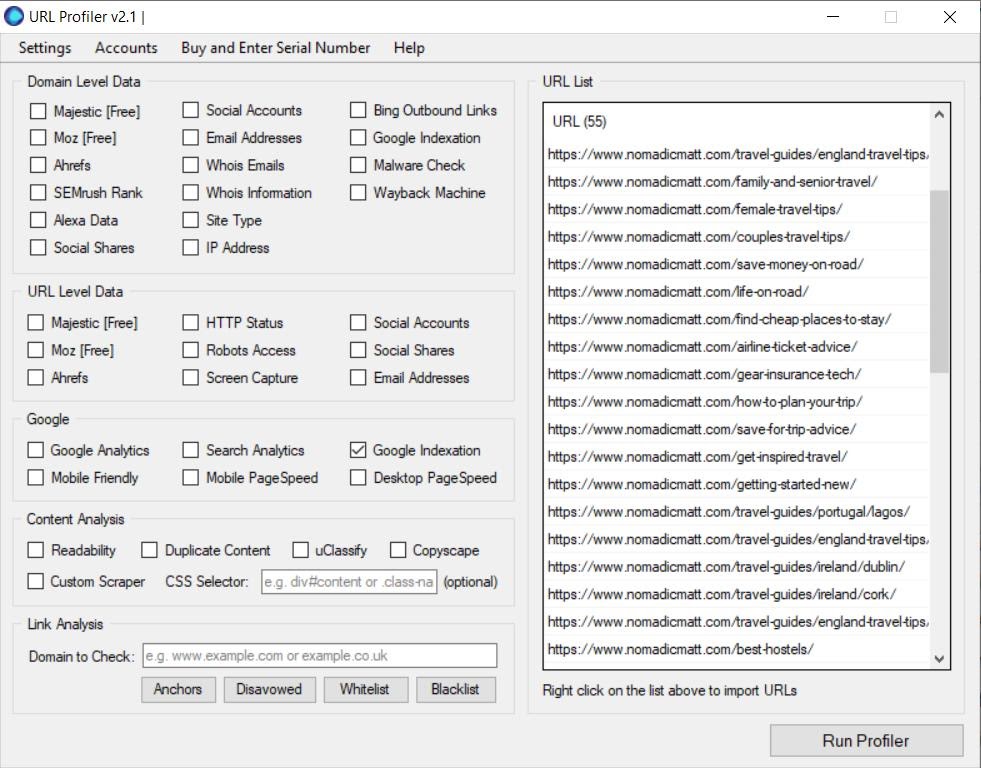

To check website indexing, use tools like Google Search Console, URL Profiler, or the Google Index Checker tool.

First, you will have to create a list of the URLs of your site pages, either manually or using special tools or plugins (such as Google XML Sitemaps). Then, enter the URLs into the tool you choose. Below is an example of how to use URL Profiler:

By clicking “Run Profiler,” you’ll check if Google indexes your website. The program will generate a spreadsheet with indexed pages and errors, as you can see below:

When choosing a tool, take its price and features into consideration. Index checkers come with different pricing models:

- Free tools (Google Search Console)

- Pay per URL (SEranking.com)

- Monthly subscription (URL Profiler)
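If your site already has an XML sitemap, the URL list mentioned above can be pulled from it with a few lines of Python. This is a minimal sketch that assumes a standard sitemaps.org `urlset` file; the function name and the sample URLs are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> list[str]:
    """Return every <loc> value found in a sitemap XML document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical sitemap content; in practice, read your own sitemap.xml file.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/</loc></url>
</urlset>"""

for url in urls_from_sitemap(SAMPLE):
    print(url)
```

The resulting list can then be pasted into whichever index checker you picked.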

How to Improve Your Website Indexing

The crawler will consider whether to index a page or not depending on its value for users. Thus, create meaningful pages and then check website indexing status on a regular basis. That will allow you to track the progress and notice problems at the early stages.

Request Indexing for a New Page

Let Google know about your new page by requesting its indexing. Such a request will work only for newly created pages and won’t help if Google has already visited them and did not index them for some reason.

Before requesting indexing, make sure to:

- Add internal links to the new page from the relevant and/or popular pages on your site

- Add quality backlinks to the new page from other resources

- Add the new page to the sitemap

Then, visit the Google Search Console and request indexing:

- Open the URL inspection tool

- Paste the link to the page you want to check

- Click on “Request indexing”

Check Existing Content

The content is the most important part of the website. If you have old pages that have not been indexed yet, that may indicate a problem. We have prepared a list of the most common content mistakes and will share tips on how to avoid them.

Backlinks

Quality backlinks tell Google that the page they refer to was highly appreciated by users. That does not mean, though, that pages without backlinks will never be indexed. It might just take longer.

Remember to keep an eye on where the links come from. Low-quality sources may affect indexing in a bad way. The same is true of mass links in blog comments, social media, etc. When thinking about how to get backlinks for your blog, it is better to focus on trustworthy and valuable resources.

Content

The content must be high-quality and unique. That is important not only for users, but also for search engines. A page whose article is mostly non-unique may be recognized by the crawler as useless, and thus it won’t be indexed.

Divide your site pages into important and unimportant ones in terms of indexing. The latter can include technical or duplicate pages that are relevant for the website performance but do not bring any value to the user. If they get indexed, it can negatively affect your overall website ranking:

- Indexing of important pages will slow down.

- Important pages will get less weight in terms of SEO.

- Search engines will trust the site less.

- If you have similar pages, an important page can be replaced by an unimportant one.

Loading Speed

According to Neil Patel, 75% of visitors would leave a page if it takes more than three seconds to load. Page loading speed directly affects indexing and ranking. The faster the speed, the more useful the page is for users. Moreover, crawlers have time limits, so they’ll index fewer pages on a slow website.

Check your site loading speed with one of the following tools:

- PageSpeed Insights measures the loading speed, highlights weaknesses, and provides recommendations.

- Google Analytics also helps check your site speed. Click on “Behavior,” then “Site loading speed.” It also integrates with PageSpeed Insights.

- Serpstat Site Audit allows you to check loading speed for mobile and desktop devices. You’ll get a summary about errors that slow down the speed and recommendations for resolving them.

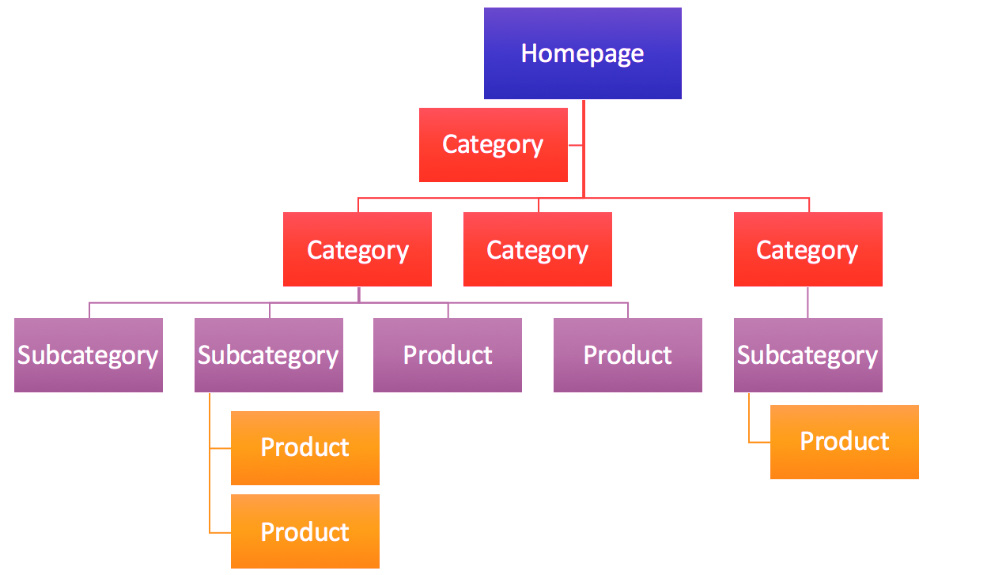

Page Nesting

Page nesting is another important point for website indexing. Basically, it is your website structure.

Pages located far from the main page may be omitted by crawlers. So, make sure that all your site pages are easily accessible.

There are two types of page nesting to take care of:

- Nesting by clicks. Check how many times the user needs to click to get to the page. If it is too deep in the structure, you may fix it by simplifying the latter. Ideally, all pages should be within three clicks from the home page.

- Nesting by URL. Crawlers don’t like long URLs, so keep them short. At the same time, if all pages sit on the first level of nesting, the website structure will also look suspicious to the crawler.
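Both kinds of nesting can be measured with a short script. The sketch below assumes you already have a map of internal links (for example, exported from a crawler); the `SITE` dictionary and both function names are hypothetical, and the three-click limit comes from the recommendation above.

```python
from collections import deque
from urllib.parse import urlsplit


def url_depth(url: str) -> int:
    """Nesting by URL: count the non-empty path segments."""
    return len([seg for seg in urlsplit(url).path.split("/") if seg])


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Nesting by clicks: breadth-first search from the home page.

    The value for each page is the minimum number of clicks needed to
    reach it from `home`.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link map: page -> pages it links to.
SITE = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/blog/post-1/comments/"],
    "/blog/post-1/comments/": ["/archive/old/"],
}

depths = click_depths(SITE, "/")
too_deep = [page for page, depth in depths.items() if depth > 3]
print(too_deep)
print(url_depth("https://example.com/blog/post-1/"))
```

Pages that end up in `too_deep` are candidates for extra internal links from pages closer to the home page.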

Bounce Rate

Bounce rate is the percentage of visitors who view only one page and then leave, out of all visitors to the site. Bounce rate varies from 10 to 90% depending on the niche. For example, online stores have a 20-40% bounce rate, while in the travel niche, it is about 37% on average.
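As a quick illustration, here is how the figure is usually calculated: single-page sessions divided by all sessions, expressed as a percentage. The function name and the sample numbers are made up; your analytics tool may count sessions slightly differently.

```python
def bounce_rate(single_page_sessions: int, total_sessions: int) -> float:
    """Return the bounce rate as a percentage of all sessions."""
    if total_sessions <= 0:
        raise ValueError("total_sessions must be positive")
    return 100.0 * single_page_sessions / total_sessions


# Hypothetical month: 1,000 sessions, 300 of which viewed a single page.
print(bounce_rate(300, 1000))
```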

A bounce rate that is too high may indicate certain problems, such as:

- Content that does not match the user’s request

- Poor design

- Intrusive advertising

- Inconvenient navigation

- Low page loading speed

- Problems with mobile-friendliness

Make sure to eliminate these problems to make visitors stay longer on your site.

Encoding

Encoding may become another core issue for crawlers as well as users. Crawlers determine the encoding based on the information received from the server or the webpage content. If there is an error in the encoding, the text might not be crawled.

Here are some reasons behind encoding errors:

- The tag <meta http-equiv="Content-Type" content="text/html; charset=encoding" /> is missing. In this tag, replace the word encoding with UTF-8, Windows-1251, or whichever encoding you are using on your website.

- The actual encoding differs from the one specified in <meta> (for example, the site is in UTF-8, and the declared encoding is Windows-1251).

- The .htaccess file does not have an encoding configuration.

- The wrong encoding is registered on the server.

- The encoding of the database is different from the encoding of the site scripts (for example, when scripts, headers, and everything else are in UTF-8, but the database is in Windows-1251).

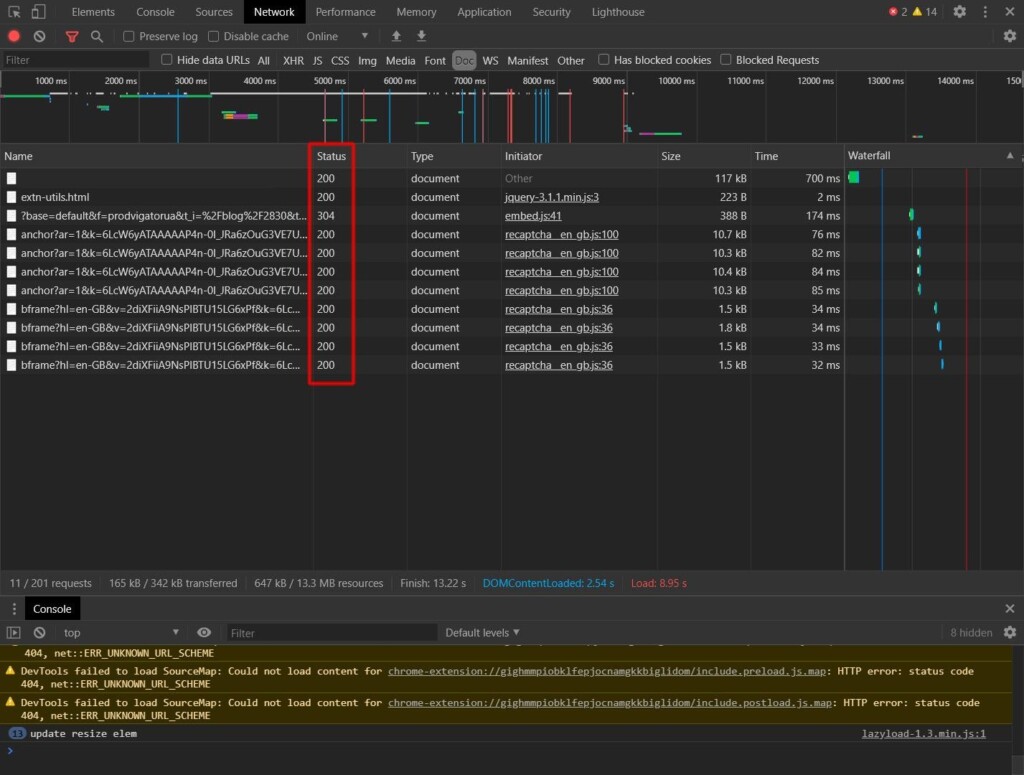
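One of the mismatches listed above, the server header declaring one encoding and the <meta> tag another, is easy to detect automatically. The following is a rough sketch: the function names are invented, the regular expression only handles the common forms of the tag, and it compares declarations only, so it will not notice a file that is physically saved in a different encoding.

```python
import re
from email.message import Message
from typing import Optional

# Matches both <meta charset="..."> and the http-equiv form of the tag.
META_CHARSET_RE = re.compile(
    r"<meta[^>]+charset\s*=\s*[\"']?\s*([\w-]+)", re.IGNORECASE
)


def charset_from_header(content_type: str) -> Optional[str]:
    """Extract the charset from a Content-Type header value, lowercased."""
    msg = Message()
    msg["Content-Type"] = content_type
    return msg.get_content_charset()


def charset_from_meta(html: str) -> Optional[str]:
    """Extract the charset declared in the page's <meta> tag, lowercased."""
    match = META_CHARSET_RE.search(html)
    return match.group(1).lower() if match else None


def encoding_mismatch(content_type: str, html: str) -> bool:
    """True when both declarations exist and disagree."""
    header = charset_from_header(content_type)
    meta = charset_from_meta(html)
    return header is not None and meta is not None and header != meta


# Hypothetical page: the server says UTF-8, the markup says Windows-1251.
PAGE = '<meta http-equiv="Content-Type" content="text/html; charset=windows-1251" />'
print(encoding_mismatch("text/html; charset=UTF-8", PAGE))
```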

Server Response Code

A server response code is issued by the server in response to a client’s request. There are many HTTP response status codes. The most common ones include:

- 200 – positive, meaning the page is successfully loaded.

- 301 – redirecting the visitor to another page.

- 404 – the page does not exist.

To check the response code, you may use Serpstat Site Audit, Screaming Frog, or Web Sniffer. You may also check the server response code right in Google Chrome. Press F12, go to the Network tab, and choose Doc. Refresh the page and look at the server response code in the Status column.
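If you prefer a script, the same check can be done with Python's standard library. This sketch uses `http.client` because it does not follow redirects, so a 301 is reported as 301 rather than as the status of the final page. The function names and the labels are assumptions for illustration, and some servers answer HEAD requests differently from GET.

```python
import http.client
from urllib.parse import urlsplit


def describe_status(code: int) -> str:
    """Map a status code to the categories discussed above."""
    if code == 200:
        return "ok"
    if code in (301, 302, 307, 308):
        return "redirect"
    if code == 404:
        return "not found"
    return "other"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request and return the raw status code (no redirects followed)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        return conn.getresponse().status
    finally:
        conn.close()


# Example usage (requires network access, so it is left commented out):
# code = fetch_status("https://example.com/")
# print(code, describe_status(code))
```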

Sitemap

All important pages that you want to be indexed have to be included in the sitemap. So, there is a need to regularly update the sitemap file and conduct audits every 10-14 days. You may use Screaming Frog or any other tool to do a crawl on your sitemap.

Without doing an audit, you may miss a range of different problems, such as:

- Duplicate pages

- Replacing pages (example – /home instead of /, when they are identical)

- Irrelevant addresses, for instance, a page address with http while you are using https

- Incorrect page URLs with www. when you are using pages without www.

- Redirect pages, 404 pages, or pages returning any other HTTP response status code other than 200
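Several of these checks can be automated once the sitemap URLs are in a list. The sketch below flags entries with the wrong protocol, the wrong host, and exact duplicates; the function name, the labels, and the `example.com` defaults are placeholders to replace with your own preferred scheme and domain. It does not request the pages, so response codes still need a separate check.

```python
from urllib.parse import urlsplit


def audit_sitemap_urls(
    urls: list[str], scheme: str = "https", host: str = "example.com"
) -> dict[str, list[str]]:
    """Return {url: [problems]} for sitemap entries that look wrong."""
    problems: dict[str, list[str]] = {}
    seen: set[str] = set()
    for url in urls:
        parts = urlsplit(url)
        issues = []
        if parts.scheme != scheme:
            issues.append("wrong scheme")
        if parts.hostname != host:
            issues.append("wrong host")
        if url in seen:
            issues.append("duplicate")
        seen.add(url)
        if issues:
            problems.setdefault(url, []).extend(issues)
    return problems


# Hypothetical sitemap entries for a site that lives at https://example.com.
ENTRIES = [
    "https://example.com/",
    "http://example.com/about",
    "https://www.example.com/blog",
    "https://example.com/",
]

for url, issues in audit_sitemap_urls(ENTRIES).items():
    print(url, issues)
```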

Filters

There are a lot of filters Google uses to regulate indexing and ranking. Some of them have already become a part of automated algorithms, while others work with the assistance of humans. In some cases, you will quickly learn that you have been sanctioned, because a message about your violations appears in Google Search Console.

But in other cases, you may not even notice any problem at the early stage. That is why it is so important to track the performance and ranking of your website, for example, with one of the following tools:

- SEMRush Sensor helps track ranking positions

- Panguin SEO Tool helps check if your website was impacted by Google’s algorithm updates

- Google Penalty Checker helps check if your website was penalized by Google

Viruses

Virus infection may occur on your website in several ways:

- Your website was hacked by exploiting vulnerabilities

- The virus is transferred from the webmaster’s computer to the site via an FTP client

- Using plugins or add-ons from doubtful sources

- Placing advertising banners containing malicious code

Viruses obviously have a destructive effect on indexing and website performance. If your website gets infected, you may be penalized by search engines and your hosting provider. Your website may drop out of the index, the traffic will decrease, etc.

To solve this issue, you will have to find and delete the virus. Then you must contact the support team from Google Search Console and let them know that the issue was eliminated. Then there will be a need to request the hosting provider to unblock you. And finally, you have to request Google to index your website again. After all these steps, the website will start to slowly recover and gain traffic.

Crawl Blocks

Your website is optimized for search engines, but still isn’t indexed by Google? The problem may be in the robots.txt file. To check this file, go to websitename.com/robots.txt and look at the code written there. Make sure it does not contain lines like these:

- User-agent: Googlebot or just *

- Disallow: /

Such lines could impede page indexing. Delete these lines and save the file. You may also check this in Google Search Console using the URL inspection tool. Just paste the URL you need to check and click on the Coverage block. There you will see whether crawling is allowed or not.
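You can also test robots.txt rules locally with Python's built-in `urllib.robotparser`. This is only a sketch: the helper name and the sample rules are invented, and the standard-library parser does not reproduce every detail of how Googlebot reads the file, so treat the result as a first check rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Check whether `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


# The rules warned about above: everything is closed to every crawler.
BLOCKING = "User-agent: *\nDisallow: /\n"

# A narrower, hypothetical rule set that only closes the /admin/ section.
PARTIAL = "User-agent: *\nDisallow: /admin/\n"

print(is_crawl_allowed(BLOCKING, "https://example.com/page"))
print(is_crawl_allowed(PARTIAL, "https://example.com/page"))
print(is_crawl_allowed(PARTIAL, "https://example.com/admin/login"))
```

To test your live file, download websitename.com/robots.txt and pass its text to `is_crawl_allowed` together with the page URL you care about.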

How to Check the Google Indexing of a Website

Getting a website indexed by Google is simple: just make sure that your website is valuable for people. By doing that, you’ll avoid most problems with indexing.

Also, remember to check the existing content for indexing. Even if it was uploaded some time ago, no site is safe from indexing issues that arise beyond your control. Thus, keeping an eye on the website indexing is an important step towards better ranking.